By Anurag Rana and Andrew Girard

November 18, 2025

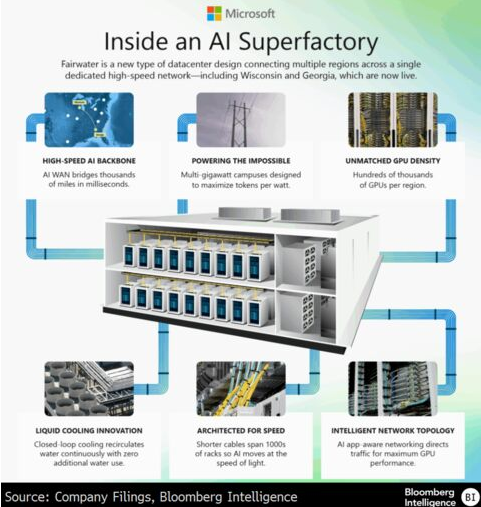

Microsoft’s AI infrastructure strategy stands out in a market grappling with fears of data-center overbuilding, as the software provider boasts industry-leading AI sales and a growing backlog of nearly $400 billion. Its first AI superfactory, which opened on Nov. 12, is designed to be workload-flexible: it can toggle between training and inference, reducing the risk of short-term obsolescence.

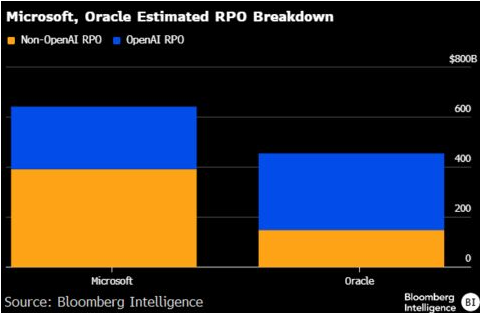

Microsoft’s data-center expansion strategy keeps it ahead of rivals, supported by industry-leading AI sales and commercial remaining performance obligations (RPO) of $392 billion as of Oct. 29, up 51% from a year earlier. Management noted that Azure’s constant-currency growth of 39% in fiscal 1Q would have been higher if not for capacity constraints. The capital-spending ramp-up, which we calculate at around $145 billion for fiscal 2026 vs. Azure sales of roughly $100 billion, is therefore backed by a spike in highly visible demand.
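As a quick check on these figures, the sketch below backs out the RPO level implied a year earlier from the 51% growth rate and expresses the fiscal 2026 capex estimate as a multiple of Azure sales. It uses only the numbers stated above, in billions of dollars. The function names are hypothetical, and the results inherit the rounding in the inputs ("around" $145 billion, "roughly" $100 billion).

```python
"""Back-of-the-envelope checks on the RPO and capex figures (USD billions)."""


def implied_prior_value(current: float, yoy_growth: float) -> float:
    """Level a year earlier, given the current level and year-over-year growth."""
    return current / (1.0 + yoy_growth)


def capex_multiple(capex: float, sales: float) -> float:
    """Capital spending per dollar of sales."""
    return capex / sales


# Inputs as stated in the text; capex and Azure sales are approximate.
RPO_NOW = 392.0
RPO_GROWTH = 0.51
CAPEX_FY26 = 145.0
AZURE_SALES = 100.0

prior_rpo = implied_prior_value(RPO_NOW, RPO_GROWTH)
multiple = capex_multiple(CAPEX_FY26, AZURE_SALES)

if __name__ == "__main__":
    print(f"Implied commercial RPO a year earlier: ~${prior_rpo:.0f}B")
    print(f"FY26 capex per dollar of Azure sales: ~{multiple:.2f}x")
```

On these inputs, commercial RPO was roughly $260 billion a year earlier, and Microsoft is spending about $1.45 in capex for every dollar of Azure sales.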

OpenAI’s widely publicized plans to spend $1.4 trillion over the next eight years (about $250 billion of it with Microsoft) seem excessive given the company’s expected year-end annualized revenue of more than $20 billion. This is fueling concerns about OpenAI’s ability to make good on those payments in the medium term.

Microsoft unveiled its first AI superfactory on Nov. 12 in Atlanta. The superfactory is a network of connected data centers in Georgia and Wisconsin designed to handle massive workloads, such as training large language models. The facilities are built on Microsoft’s Fairwater architecture, a framework that reduces risk if, for example, an AI lab is unable to fulfill its training-contract commitments. In that scenario, Microsoft could scale back its capital spending and shift data-center resources toward inferencing, which serves its various Copilot products. Microsoft can also use the capacity to support its own AI research.

Microsoft’s $392 billion backlog doesn’t include the $250 billion commitment made by OpenAI, which most likely spans 7-8 years. Microsoft is OpenAI’s biggest cloud partner, and our analysis suggests most of that revenue will come from long-tail inferencing work rather than training. In contrast, we estimate that around two-thirds of Oracle’s $455 billion RPO comes from OpenAI, with most of that revenue stream tied to training workloads.
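The OpenAI-related commitments cited above can be put on an annual basis with simple division. The sketch below assumes, purely for illustration, that each commitment is spread evenly over its term; actual revenue recognition will follow the contracts and the workload ramp, which the text does not specify. The function name is hypothetical.

```python
"""Annualize the OpenAI-related commitments cited in the text (USD billions).

Assumes straight-line spreading over each term, an illustrative simplification.
"""


def average_per_year(total: float, years: float) -> float:
    """Average annual amount if a commitment is spread evenly over its term."""
    return total / years


# OpenAI's overall plan vs. its expected year-end annualized revenue.
OPENAI_PLAN = 1_400.0
OPENAI_PLAN_YEARS = 8
OPENAI_REVENUE_RUN_RATE = 20.0  # text says "above $20 billion", so this is a floor

openai_per_year = average_per_year(OPENAI_PLAN, OPENAI_PLAN_YEARS)
# Because revenue is a floor, this ratio is an upper bound.
spend_to_revenue = openai_per_year / OPENAI_REVENUE_RUN_RATE

# Microsoft's share, which sits outside its $392 billion backlog (7-8 year term).
MSFT_SHARE = 250.0
msft_per_year = {yrs: average_per_year(MSFT_SHARE, yrs) for yrs in (7, 8)}

# Oracle: roughly two-thirds of its $455 billion RPO estimated to be from OpenAI.
oracle_openai_rpo = 455.0 * 2 / 3

if __name__ == "__main__":
    print(f"OpenAI average spend: ~${openai_per_year:.0f}B/yr, "
          f"up to ~{spend_to_revenue:.2f}x its revenue run rate")
    for yrs, amount in msft_per_year.items():
        print(f"Microsoft share over {yrs} years: ~${amount:.0f}B/yr")
    print(f"Oracle RPO tied to OpenAI: ~${oracle_openai_rpo:.0f}B")
```

Under the even-spreading assumption, OpenAI’s plan averages about $175 billion a year, close to 9x its expected revenue run rate; Microsoft’s $250 billion share works out to roughly $31-36 billion a year; and about $303 billion of Oracle’s RPO would be OpenAI-linked.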

In the long run, we expect the mix of AI workloads to shift from predominantly training today to 80-90% inference, especially if more use cases emerge as the cost of compute comes down.